Echoview user in the spotlight

We are delighted to present the outstanding research and accomplishments of our Echoview users worldwide through our 'In the Spotlight' series. This series highlights their insights and experiences, showcasing innovative work in hydroacoustics and sharing valuable knowledge with our user community.

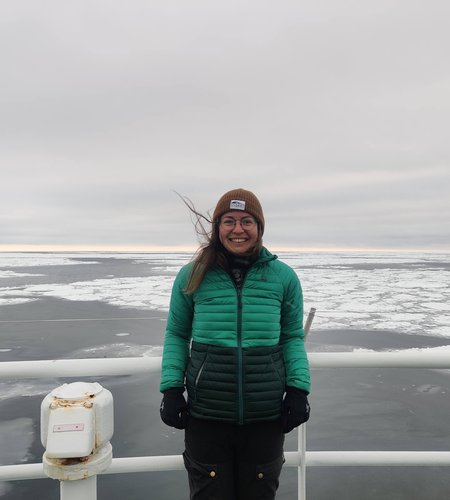

In our latest instalment, we were thrilled to catch up with Dr. Muriel Dunn, a Research Scientist at SINTEF Ocean in Trondheim, Norway. Muriel is developing methods that combine observational data streams from surface data buoys and remotely operated vehicles to better understand the ocean. In December 2023, she successfully defended her PhD in Fisheries Science with her doctoral thesis, “Advancing active hydroacoustic methods with broadband echosounders for ecological surveys”.

We are grateful for the opportunity to learn more about her work and to share her story. Thank you, Muriel.

What is your research topic?

My research has centred on using broadband echosounders for spectral-based classification, validated through a series of mesocosm experiments (AZKABAN/AFKABAN: Arrested Zooplankton/Fish Kept Alive for Broadband Acoustic Net experiments).

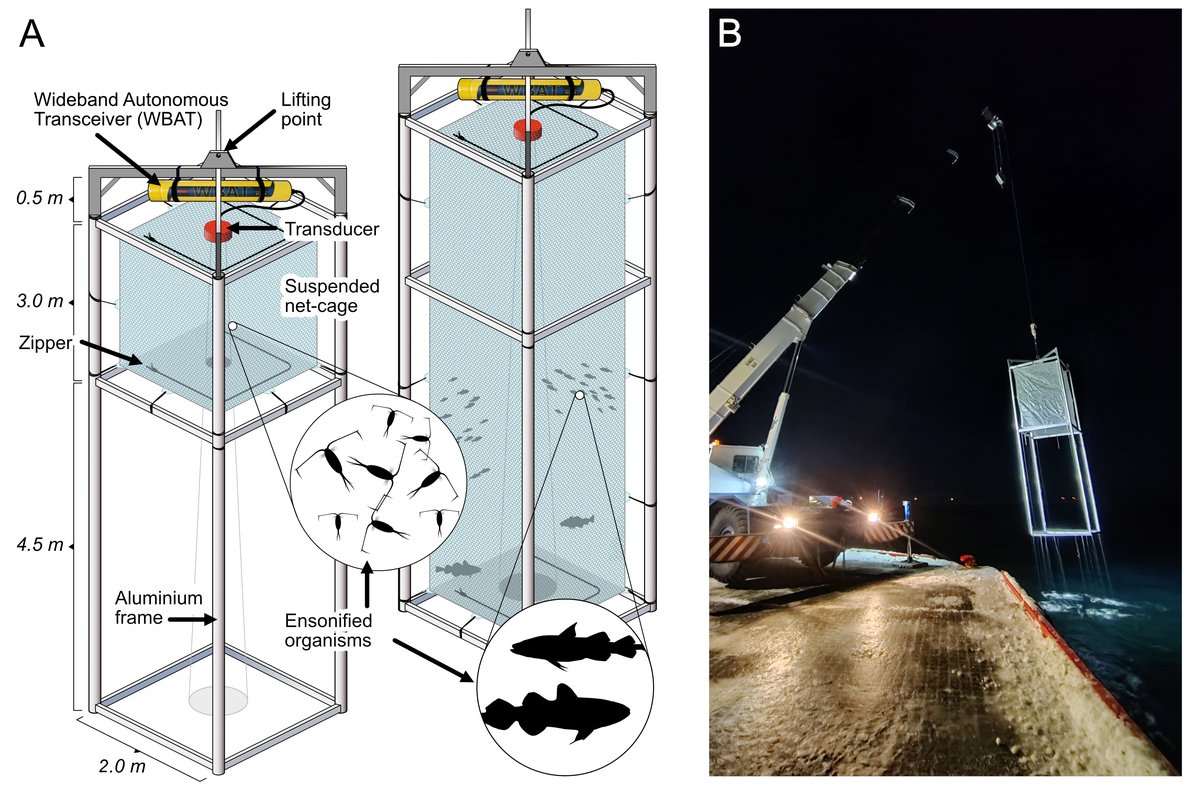

This image shows the set-up of the AZKABAN/AFKABAN experiments (diagram by Tom Langbehn, University of Bergen).

During these experiments, we introduced either a live zooplankton community or a few fish of a single species into a submerged mesocosm (8 m deep, 2 m wide and 2 m long) and let them swim freely inside for hours. While they swam, we recorded thousands of individual acoustic target detections. This allowed us to characterize individual tracks and to study the range of target strength spectra measured from a single individual as it swam through the acoustic beam.

Live zooplankton are added to the mesocosm through a zipper. Photo: Randall Hyman (www.randallhyman.com).

The zooplankton were classified using a training dataset generated with sound scattering models, whereas the fish classifiers were trained on the mesocosm detections and compared between species. Much of this work was done in close collaboration with Dr. Chelsey McGowan-Yallop, who also conducted the experiments and developed the classification code.

During my PhD, all of my work was done with SIMRAD EK80 echosounders from Kongsberg Discovery, either the battery-powered WBAT or the WBT Mini. I also used a BioSonics submersible echosounder for my Master’s research. I am currently working with the OceanLab Marine Observatory, which is equipped with Nortek’s Signature100.

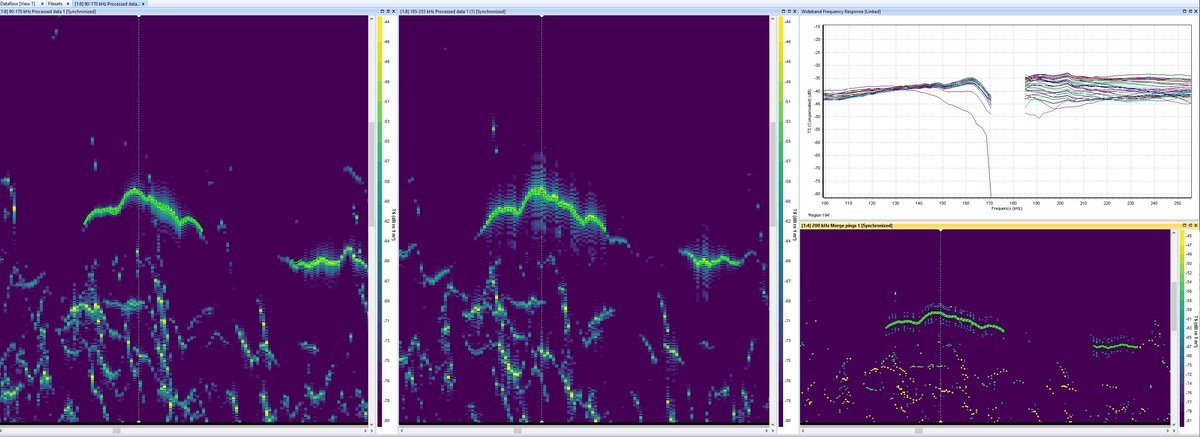

Data from the AZKABAN experiment were processed in Echoview to detect single targets, track the detected targets, and calculate their wideband frequency response.

How has Echoview assisted your research?

The most valuable assistance I have received from Echoview has been through the courses. I took the Clean Sweep course on noise with Dr. Toby Jarvis and the Automation course with Dr. Haley Viehman.

The Clean Sweep course was very useful because hydroacoustic data are inherently susceptible to noise and attenuation. It was valuable to learn how to identify noise in an echogram, attribute each unwanted contribution to a type of noise, apply techniques to mitigate its source, and follow best practices for cleaning the data.

"This type of hands-on experience and knowledge can save you a lot of time when working with a new, noisy echosounder setup."

I mainly work with large, continuous hydroacoustic datasets from buoys and autonomous vehicles. Although looking through files a handful at a time is very informative and helps to define a processing routine, the Automation course got me started on letting the computer do the repetitive work, which also reduces the subjectivity of my processing routines. I still frequently use the example scripts from the course to explore and process new datasets.

What have been your key findings?

The key finding from the mesocosm experiments overall was that target strength spectra are highly variable, both across a population and even within a single individual as it swims through the acoustic beam. This variability can be leveraged for spectral-based classification, as in the case of AFKABAN (Chapter 4 in Dunn, 2024), where we found that the target strength spectra of two fish species and a shrimp species varied in sufficiently distinct ways for spectral-based classification to succeed. In other cases, the variability can be confounding, as in AZKABAN, where classification algorithms trained on the modelled target strength spectra of individual zooplankton could not discriminate between in situ detections of zooplankton (Dunn et al., 2023). I look forward to continuing to study the potential of spectral-based classification and identifying its limitations.

Photo credit: Dr. Chelsey McGowan-Yallop.

How did you get started working with echosounders?

After my Bachelor’s in physics and physical oceanography, I worked on groundfish trawlers as a Fisheries Observer on the west coast of Canada. When I wasn’t counting fish, I liked to spend time in the wheelhouse learning how the crew decided where to set the nets, and that is how I was introduced to the sonar systems they used. I thought there must be a way to extract more information from the acoustics, to gain more certainty about species identification in a dense layer of fish and to reduce the risk of trawling through an aggregation of bycatch (non-targeted species that are thrown back into the sea). I then studied physical oceanography with a focus on acoustics during my Master’s. There, I started using an echosounder to validate fish detection with an acoustic Doppler current profiler, an instrument used to measure currents (Dunn and Zedel, 2022).

To read more about Dr. Muriel Dunn’s research

Muriel Dunn, Len Zedel (2022). Evaluation of discrete target detection with an acoustic Doppler current profiler. Limnology and Oceanography: Methods.

Muriel Dunn, Chelsey McGowan-Yallop, Geir Pedersen, Stig Falk-Petersen, Malin Daase, Kim Last, Tom J Langbehn, Sophie Fielding, Andrew S Brierley, Finlo Cottier, Sünnje L Basedow, Lionel Camus, Maxime Geoffroy (2023). Model-informed classification of broadband acoustic backscatter from zooplankton in an in situ mesocosm. ICES Journal of Marine Science.

Muriel Dunn (2024). Advancing active hydroacoustic methods with broadband echosounders for ecological surveys. Doctoral (PhD) thesis, Memorial University of Newfoundland.

Echoview user story contribution

Do you have an Echoview user story, photo, or anecdote you wish to share? You can participate by emailing us at info@echoview.com.