Have you ever wondered what work goes into ensuring Echoview’s reliability and accuracy?

Echoview is the industry-leading hydroacoustic data processing software, and we take pride in ensuring its high quality for our users.

With Echoview 13 being our biggest and greatest release ever, we thought it was a great opportunity to spotlight the Quality Assurance team and the vast amount of work they perform continuously, especially in the lead-up to a major release.

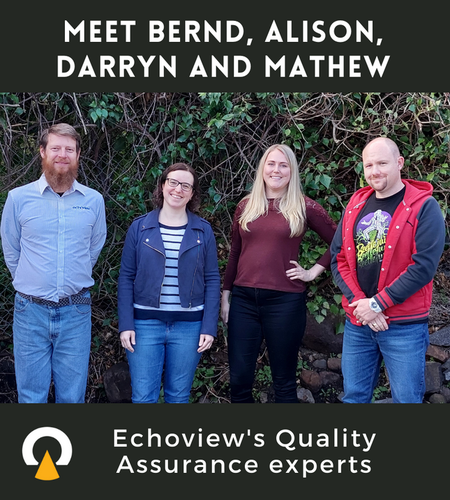

Introducing our four-person super team that regularly tries to break Echoview! Every day they meticulously scour the software by checking the results of more than 2,400 automated regression tests that cover over 1,000,000 lines of code, as well as finding unusual and abstract pathways to test Echoview’s robustness.

Their hugely important role directly supports our clients by ensuring that they can continue to process hydroacoustic data in Echoview with confidence.

As we prepare for the release of Echoview 13, we sat down with the Team Leader of Quality Assurance, Alison Wilcox, to find out more about her team and what their work involves.

Q) What are the responsibilities of the Quality Assurance (QA) team?

Part of our job is to work closely with our programming team to assist in the design process for any new features. This helps us to set up a plan which we can then use to test changes made by our developers.

We also make improvements to testing processes, including our automated regression test program, and create new automated tests to detect future changes.

There are more general test plans as well, which we set up, modify, and run to help us find unexpected side effects of recent changes.

Any issues we discover need to be repeatable, so we will spend time tracking them down and writing steps so that developers can investigate and fix them. Sometimes we assist the support team in tracking down problems that customers have found as well.

Q) What types of QA testing does your team do?

We regularly compare results from Echoview against other software. This can be software with a specific use, such as that from an echosounder manufacturer, or more general spreadsheet software that we use to recreate algorithms. Sometimes we compare results against a different Echoview feature or version to verify a change.
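
A comparison like this could be sketched in Python along the following lines. This is a minimal, hypothetical example rather than Echoview's actual test code: it assumes both tools have already exported their values into dictionaries keyed by a cell identifier, and the function name `compare_results`, the tolerances, and the sample numbers are all illustrative assumptions.

```python
import math


def compare_results(actual, reference, rel_tol=1e-6, abs_tol=1e-9):
    """Return a list of human-readable mismatches between two result sets.

    Both arguments map a cell identifier to a numeric value. The names and
    tolerances are illustrative assumptions, not Echoview settings.
    """
    mismatches = []
    for key in sorted(set(actual) | set(reference)):
        if key not in actual:
            mismatches.append(f"{key}: missing from actual results")
        elif key not in reference:
            mismatches.append(f"{key}: missing from reference results")
        elif not math.isclose(actual[key], reference[key],
                              rel_tol=rel_tol, abs_tol=abs_tol):
            mismatches.append(
                f"{key}: actual {actual[key]!r} != reference {reference[key]!r}"
            )
    return mismatches


# Made-up sample values, purely for illustration.
exported = {"cell_1": -65.2, "cell_2": -70.1, "cell_3": -58.9}
recreated = {"cell_1": -65.2, "cell_2": -70.4, "cell_3": -58.9}

for line in compare_results(exported, recreated):
    print(line)
```

Comparing within a tolerance rather than for exact equality is a deliberate choice here, since two independent implementations of the same algorithm rarely agree to the last floating-point digit.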

Tests can often be scripted as well, and we usually do this using Python or Microsoft’s VBScript. Scripting can make it easier to check performance, but it is also helpful in many other situations.

"We spend a lot of time within our own software, creating unusual scenarios that may provoke issues, and setting up test cases that try to look at things from the perspective of our customers."

For example, we check how easy it is to find and use a new feature.

Another aspect of our work involves building on our automated regression test software. This may mean simply adding a new test that exports data, or developing the software further so that it can be used to test a new feature.

Q) When you find a bug, what is the process for fixing it?

When a bug is found, we first make sure that we can easily repeat it, and then the steps are written down in a task that we add to our task management system.

"This system contains almost 30,000 tasks, and around 6,000 of those are potential changes we would like to make in the future or are currently working on. The very first task was added to this system way back in 2001!"

Once the bug is in our system, it gets prioritized and then assigned to a developer to investigate and fix. The task is then sent to the QA team, who first check that they can reproduce the issue in the version of Echoview it was found in. They then check it in the latest version, and if the bug is fixed, the task can be closed or sent to the Documentation team. If there are further issues, the task is sent back to the developer. This can happen several times, especially if the bug is complicated.
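
The workflow described here can be pictured as a small state machine. The sketch below is only an illustration of that idea, not how Echoview's task management system is built: the status names, the `TRANSITIONS` table, and the `advance` function are hypothetical, and what happens to a task after it reaches the Documentation team is left open because the interview does not say.

```python
from enum import Enum


class Status(Enum):
    LOGGED = "logged"
    PRIORITIZED = "prioritized"
    WITH_DEVELOPER = "with developer"
    WITH_QA = "with QA"
    WITH_DOCUMENTATION = "with documentation"
    CLOSED = "closed"


# Allowed moves, following the process described in the interview.
TRANSITIONS = {
    Status.LOGGED: {Status.PRIORITIZED},
    Status.PRIORITIZED: {Status.WITH_DEVELOPER},
    Status.WITH_DEVELOPER: {Status.WITH_QA},
    # QA either accepts the fix or sends the task back to the developer.
    Status.WITH_QA: {
        Status.CLOSED,
        Status.WITH_DOCUMENTATION,
        Status.WITH_DEVELOPER,
    },
    # Assumption: treated as end states here because the source says no more.
    Status.WITH_DOCUMENTATION: set(),
    Status.CLOSED: set(),
}


def advance(current, target):
    """Return `target` if the move is allowed, otherwise raise ValueError."""
    if target not in TRANSITIONS[current]:
        raise ValueError(
            f"cannot move a task from {current.value} to {target.value}"
        )
    return target


# A complicated bug that bounces back to the developer once before closing.
status = Status.LOGGED
for step in (Status.PRIORITIZED, Status.WITH_DEVELOPER, Status.WITH_QA,
             Status.WITH_DEVELOPER, Status.WITH_QA, Status.CLOSED):
    status = advance(status, step)
print(status.value)
```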

Q) Can you describe a day in the life of your team?

At an early stage in a new release, a day is likely to include someone working on improving our internal processes, such as updating test plans that we use regularly, or adding new features to our automated regression test software.

Another team member may be discussing upcoming new features with developers to check requirements and ensure that design issues are spotted early in the process. This will also involve setting up a test plan that covers the requirements we decide on as a team.

Later in the release, once new features have been added to the program, the QA team members will each begin running through test plans for the features they have been assigned to check. At this stage, we also set up data and EV files that will be used for the tests. If a feature that a QA team member is working on has problems, they will move on to test a different feature or bug fix after a discussion with the developer, who then takes back the task to add further improvements.

Towards the end of a release, the QA team will be finishing off testing for the last features that will be added to the release and adding new tests to our automated regression test software. This is also when we run through some more general test plans, which are done every release to make sure that the new features we added did not change something unexpected.

Q) Can you provide an example of the work involved in one feature change?

Some new features, such as operators, are fairly isolated from the rest of the program, and QA will be based on a particular test plan we have already created. For a new operator, we usually need to test at least the following:

- The results of the algorithm, using a variety of different operator settings and input values

- The variable data types that can be used as operands

- How the operator interacts with other objects on the Dataflow

- The speed at which the operator performs
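
As a rough illustration of the first and last items in this list, the sketch below runs a stand-in operator over a grid of settings and input values, checks each result against an independent re-implementation, and times a larger run. Everything in it is hypothetical: `threshold_operator` is a made-up example rather than a real Echoview operator, and the settings and sample values are invented. Operand data types and Dataflow interactions are not covered.

```python
import itertools
import time


def threshold_operator(samples, minimum):
    """Stand-in operator: samples below `minimum` become None ("no data")."""
    return [s if s >= minimum else None for s in samples]


def reference_threshold(samples, minimum):
    """Independent re-implementation used as the expected result."""
    expected = []
    for s in samples:
        if s < minimum:
            expected.append(None)
        else:
            expected.append(s)
    return expected


# Hypothetical operator settings and input values.
SETTINGS = [-80.0, -70.0, -60.0]
INPUTS = [
    [],                            # empty input
    [-90.0, -75.0, -65.0, -50.0],  # values either side of each setting
    [-70.0, -70.0],                # values exactly on a setting boundary
]


def run_operator_checks():
    """Return every (setting, input) pair where the operator disagrees."""
    failures = []
    for minimum, samples in itertools.product(SETTINGS, INPUTS):
        actual = threshold_operator(samples, minimum)
        if actual != reference_threshold(samples, minimum):
            failures.append((minimum, samples))
    return failures


def time_operator(sample_count=100_000, minimum=-70.0):
    """Return the seconds taken to run the operator on synthetic samples."""
    samples = [-100.0 + (i % 60) for i in range(sample_count)]
    start = time.perf_counter()
    threshold_operator(samples, minimum)
    return time.perf_counter() - start


print("failures:", run_operator_checks())
print(f"elapsed: {time_operator():.4f} s")
```

Generating the test cases with `itertools.product` keeps the grid of settings and inputs easy to extend, which suits a test plan that is reused for each new operator.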

Other types of features can require a lot more work to set up a test plan, especially if they are very different to features we have added previously. Many additional tests may be necessary to cover how the feature integrates with the rest of the program. These tests can sometimes be scripted, but often must be done manually. Visual design will also be considered for any new user interface elements, and this can involve lots of discussion with multiple team members.

After a feature has been verified, we then need to add tests either to our automated regression test program or to our more general test plans that are run through manually each release.

Acknowledgements: Thank you for your time, Alison and Team!